By: Dr. Rachel Brown, NCSP

The purpose of universal benchmark screenings and progress monitoring is to improve all students’ learning outcomes. Such assessments are best used within a 5-step problem-solving process that includes (a) problem identification, (b) problem analysis, (c) plan development, (d) plan implementation, and (e) plan evaluation. This and other models for school-based problem solving are intended to make the steps toward problem solutions explicit. The process begins with problem identification, which is a primary goal of screening.

School-based problem solving is often implemented through one or more teams (e.g., grade level, building level) that meet regularly to support teachers, who in turn support students. Educators have the capacity to influence and improve students’ learning outcomes by reviewing and refining their practices each year. To do so, educators should meet to interpret data and use them to guide their practices. These meetings should occur immediately after each screening window. At each meeting, grade- and school-level teams should identify and analyze problems and document a plan with measurable goals to solve or reduce them (e.g., reduce the number of students below benchmark in reading). The plan should state the specific goal and how implementation will be monitored. As we approach the spring screening window, school leaders and teachers should be prepared to evaluate this past year’s plans and efforts and make decisions about them. It is almost time to decide how we will solve even more problems more effectively next year.

Annual Spring Review

The best time of year to review student support practices is the spring. A spring review lets team members reflect on and interpret student outcomes while the events of the current year are still fresh in everyone’s mind. By reviewing data in April or May, team members can consider what has worked well during that school year and what needs to be changed. Waiting until the fall is less effective, because most teachers will have entirely new students and might not recall as easily which practices need to change. Examples of possible changes include revising the school schedule to make sure there are enough minutes each day for both core instruction and additional intervention, or changing which interventions are used in relation to students’ learning needs. When teams arrange for such changes in the spring, they can be ready to go on the first day of school in the fall. Such planning prevents the loss of instructional time in the fall, when a team might otherwise spend weeks deciding what to do for struggling students. The annual spring data review is best conducted immediately after spring screening data are collected. Such data will provide a snapshot of the school’s overall success in meeting the needs of all students. If such a review after spring screening is not possible, teams are urged to review the available data from fall and spring screening as well as from progress monitoring and other forms of assessment used.

System-Level Data

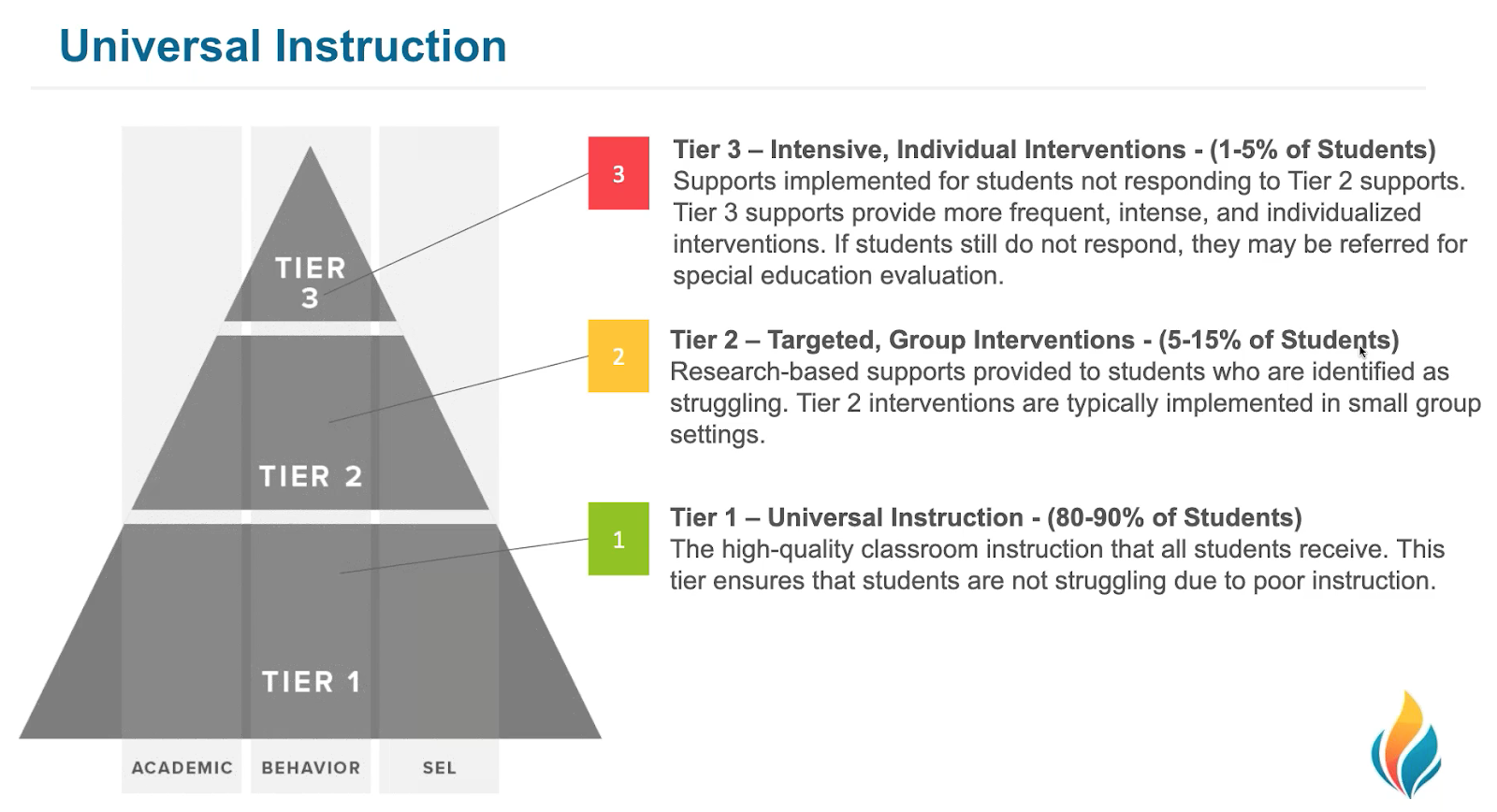

A second key to using student data to improve learning outcomes is to be sure that teams review “system” level information about student performance. Although problem-solving teams spend much of their time on individual student progress in order to make decisions about instructional supports, the annual spring data review is an important time to consider trends at the class, grade, and school levels. Data for groups can reveal the general effectiveness of current practices and show whether any specific teachers, grades, or buildings need to consider changes. Such data are often referred to as system-level indicators because they show how well the overall school or district practices are meeting the needs of all students. System-level review is important for teams because if the core (e.g., Tier 1) instruction is not effective for at least 80% of students, different methods of student support are needed (National Center on Response to Intervention, 2016). FastBridge Learning offers a number of group-level reports that teams can use to examine the effects of instruction. For example, the Group Screening Report, Group Growth Report, and Class Impact Report are all helpful tools. By recognizing the strengths and weaknesses of a school’s overall efforts to support students, teams are in a better position to develop and implement practices that can benefit many students instead of solving problems one student at a time.
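For teams that keep screening scores in a spreadsheet or export, the 80% criterion can be checked with a few lines of arithmetic. The following is only a minimal sketch in Python, not a FastBridge Learning report or API: the function names, the record layout (grade, score, benchmark), and the sample scores are all hypothetical and chosen for illustration.

```python
from collections import defaultdict

# Assumed target: Tier 1 core instruction effective for at least 80% of students.
TIER1_TARGET_PERCENT = 80


def benchmark_counts(records):
    """Return {grade: (students at or above benchmark, total students)}.

    Each record is a hypothetical (grade, score, benchmark) tuple.
    """
    counts = defaultdict(lambda: [0, 0])
    for grade, score, benchmark in records:
        counts[grade][1] += 1
        if score >= benchmark:
            counts[grade][0] += 1
    return {grade: tuple(pair) for grade, pair in counts.items()}


def grades_needing_tier1_review(records, target_percent=TIER1_TARGET_PERCENT):
    """List grades where fewer than target_percent of students met benchmark."""
    flagged = []
    for grade, (met, total) in benchmark_counts(records).items():
        # Integer comparison avoids floating-point rounding at exactly 80%.
        if met * 100 < target_percent * total:
            flagged.append(grade)
    return sorted(flagged)


# Made-up spring screening data: (grade, score, benchmark).
SAMPLE_RECORDS = [
    (2, 45, 40), (2, 52, 40), (2, 40, 40), (2, 61, 40), (2, 33, 40),
    (3, 70, 75), (3, 80, 75), (3, 90, 75), (3, 60, 75), (3, 74, 75),
]

print(benchmark_counts(SAMPLE_RECORDS))
print(grades_needing_tier1_review(SAMPLE_RECORDS))
```

In this made-up sample, four of five second graders meet the benchmark, which is exactly 80%, so grade 2 is not flagged. Only two of five third graders do, so grade 3 would be a candidate for changes to core instruction.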

Early Intervention

The work done as part of an annual spring data review will bring many benefits in the fall of the following school year. Most importantly for students, teachers will be able to address their learning needs from the first day of school rather than waiting to provide interventions several weeks after the school year begins. The term early intervention is often used to describe targeted instruction provided for children at risk of later problems. For example, students at risk for reading problems can be provided with early intervention in the form of direct and systematic reading instruction that includes all five key areas of reading (National Reading Panel, 2000). Early intervention can have another meaning too. It can refer to implementing intervention services as early as possible in a school year so that students do not fall farther behind while waiting for support. Conducting annual spring data reviews using universal benchmark screening and progress monitoring scores for groups of students, with measures such as those provided by FastBridge Learning, makes it possible for teachers to start using the appropriate instructional methods and materials with all students. Although some students will move over the summer and during the school year, teachers and students still benefit from reviewing data in the spring and implementing new practices in the fall because this type of data review focuses on teaching activities that affect all students, not individuals.

As this school year comes to an end, school teams can take advantage of the formative data collected throughout the school year to determine whether changes in student supports are needed. Such reviews are best done in the spring of each school year using aggregated data at the class, grade, and school levels. This type of review helps teams learn the effectiveness of the multiple tiers of support (i.e., response to intervention [RTI]) used to meet the needs of all students. Whether at the grade or school level, a universal goal is for Tier 1 core instruction to be effective for at least 80% of students. When this is the case, teams can focus their attention on the types of interventions and progress measures that will be most helpful for small groups of students needing strategic (e.g., Tier 2) and intensive (e.g., Tier 3) intervention. If fewer than 80% of students are meeting benchmark goals, then changes in Tier 1 core instruction are recommended. FastBridge Learning has a number of group-level reports that can assist school teams in looking at the results of their support efforts and establishing plans for the following school year.

References

Deno, S. L. (2013). Problem-solving assessment. In R. Brown-Chidsey & K. J. Andren (Eds.), Assessment for intervention: A problem-solving approach (2nd ed., pp. 10-38). New York: Guilford.

National Center on Response to Intervention. (2016). Multi-level prevention system. Retrieved from http://www.rti4success.org/essential-components-rti/multi-level-prevention-system

Dr. Rachel Brown is Associate Professor of Educational Psychology at the University of Southern Maine and also serves as FastBridge Learning's Senior Academic Officer. Her research focuses on effective academic assessment and intervention, including multi-tier systems of support.