By: Dr. Rachel Brown, NCSP

Role of State Assessments

Since 2001, in order to receive federal funding under the No Child Left Behind Act, all US states have been required to conduct annual assessments of students in grades 3 through 8. Initially, most states developed their own tests and matched them to the state’s learning standards. The assessment landscape began to shift in 2008 when the National Governors Association (NGA) and the Council of Chief State School Officers (CCSSO) initiated discussions about creating a set of standards that could be adopted by all states. Importantly, the learning standards used in any given state must be determined by the state itself because the US federal government is barred from setting states’ school policies. The outcome of these efforts was the Common Core State Standards (CCSS), which were made available to states for adoption in 2010. By 2011, 48 states had adopted the CCSS; some states later changed their adoption plans, and as of 2016, 42 states and 5 territories have adopted them (Common Core State Standards Initiative, 2016). Once the standards were adopted by a majority of states, the US Department of Education issued a request for proposals to develop assessments aligned with the CCSS. In 2010, development grants were awarded to the Partnership for Assessment of Readiness for College and Careers (PARCC) and the SMARTER Balanced Assessment Consortium (U.S. Department of Education, 2010). States were not required to select one of these new assessments, but some did. Other states maintained their existing state assessments.

The state assessments, whether based on CCSS or not, are designed to provide information about the effectiveness of schools across the US. Some states passed rules requiring students to pass the state exam in order to receive a high school diploma. The attention to each state’s annual assessment has led some to refer to these tests as “high stakes” because important decisions about students could result from state test scores. There have been many critics of the state assessments. Teachers have often argued that the tests are unfair because they do not take into account each student’s individual background. Some parents have complained that the tests are too hard or that using them to determine diploma eligibility is not appropriate. Thankfully, researchers realized that regardless of which test is used in a state, there are ways to predict student performance by using other assessments earlier in the school year. The best way to find out which students might not pass a state test is to conduct universal screening assessments. These tests are usually given three times a year: in the fall, winter, and spring. Given that the most recent federal education law, the Every Student Succeeds Act (ESSA), requires annual assessments of all students in grades 3 through 8 and once in high school, it is unlikely that state-level tests will go away soon (U.S. Department of Education, 2016). Therefore, educators at the local level will benefit from understanding how to use screening assessments during the school year to identify students who need additional instruction in order to pass the state assessment.

Universal Screening

Screening assessment involves testing all students with a brief but predictive measure of key skills. Typically, such screenings are done three times a year with elementary grade students and less frequently in older grades. By screening all students, teachers can learn which students are struggling the most. This information can be used to implement additional instruction (also called intervention). A number of universal screening assessments are available, including both computer adaptive tests (CAT) and curriculum-based measures (CBM). Adaptive assessments are administered on a computer and adjust each question based on the student’s answers to prior questions. This is helpful because it shows each student’s current skill level. CBM are shorter measures that are usually timed and show how automatic a student is with a given skill. The Formative Assessment System for Teachers™ (FAST™) from FastBridge Learning has both adaptive assessments and CBM for reading and math that can be used to screen all students.

In order for screening scores to be helpful in predicting which students are likely to pass an upcoming state assessment, the screening data must be analyzed in relation to the scores the same students obtained on their state test. FastBridge Learning has conducted analyses of both its reading and math screening assessments and confirmed that these measures are predictive of students’ state assessment scores. The specific reading measures analyzed were earlyReading, aReading, and CBMreading. Both aReading and CBMreading scores were found to correlate strongly with the state tests in Georgia, Massachusetts, Minnesota, and Wisconsin. In addition, data from Wisconsin showed that earlyReading scores correlated strongly as well. Using criteria from the National Center for Response to Intervention (NCRTI), the analyzed data indicated that 62% of the scores provided convincing evidence of predicting the state test score. An additional 32% of scores provided partially convincing evidence of predictability. Findings for math scores were similar. aMath scores were shown to be predictive of state test scores in Georgia and Minnesota, and earlyMath scores were predictive of state test scores in Colorado, Illinois, Indiana, Iowa, Massachusetts, Minnesota, New York, and Vermont.
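For readers who want to see what this kind of analysis can look like, here is a minimal sketch in Python. It is not the analysis FastBridge Learning conducted. The scores, the cut scores, and the function names (`pearson_r`, `classification_accuracy`) are all hypothetical and chosen only for illustration. The sketch pairs each student’s screening score with that same student’s state test score, computes the correlation between the two, and then checks how well a screening cut score flags the students who went on to miss the state passing score.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def classification_accuracy(screen, state, screen_cut, state_cut):
    """Compare screening flags with state test outcomes.

    A student is 'flagged' when the screening score is below screen_cut
    and 'did not pass' when the state score is below state_cut.
    """
    tp = fp = tn = fn = 0
    for s, t in zip(screen, state):
        flagged = s < screen_cut
        did_not_pass = t < state_cut
        if flagged and did_not_pass:
            tp += 1  # flagged, and did not pass the state test
        elif flagged:
            fp += 1  # flagged, but passed the state test
        elif did_not_pass:
            fn += 1  # missed by screening, did not pass
        else:
            tn += 1  # not flagged, and passed
    return {
        # Share of students who did not pass that screening caught
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        # Share of students who passed that screening left unflagged
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


# Hypothetical data: one entry per student, same order in both lists.
fall_screening = [410, 455, 470, 480, 495, 505, 520, 540]
state_test = [300, 320, 345, 340, 360, 355, 380, 400]

r = pearson_r(fall_screening, state_test)
accuracy = classification_accuracy(
    fall_screening, state_test, screen_cut=475, state_cut=350
)
print(f"correlation: {r:.2f}")
print(accuracy)
```

In practice, a school or vendor would run this on a much larger sample and judge the results against published criteria such as those from NCRTI. The point of the sketch is only that both pieces of information, the correlation and the accuracy of the cut score, come from matching each student’s screening score to that student’s later state test score.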

Not Waiting to Fail

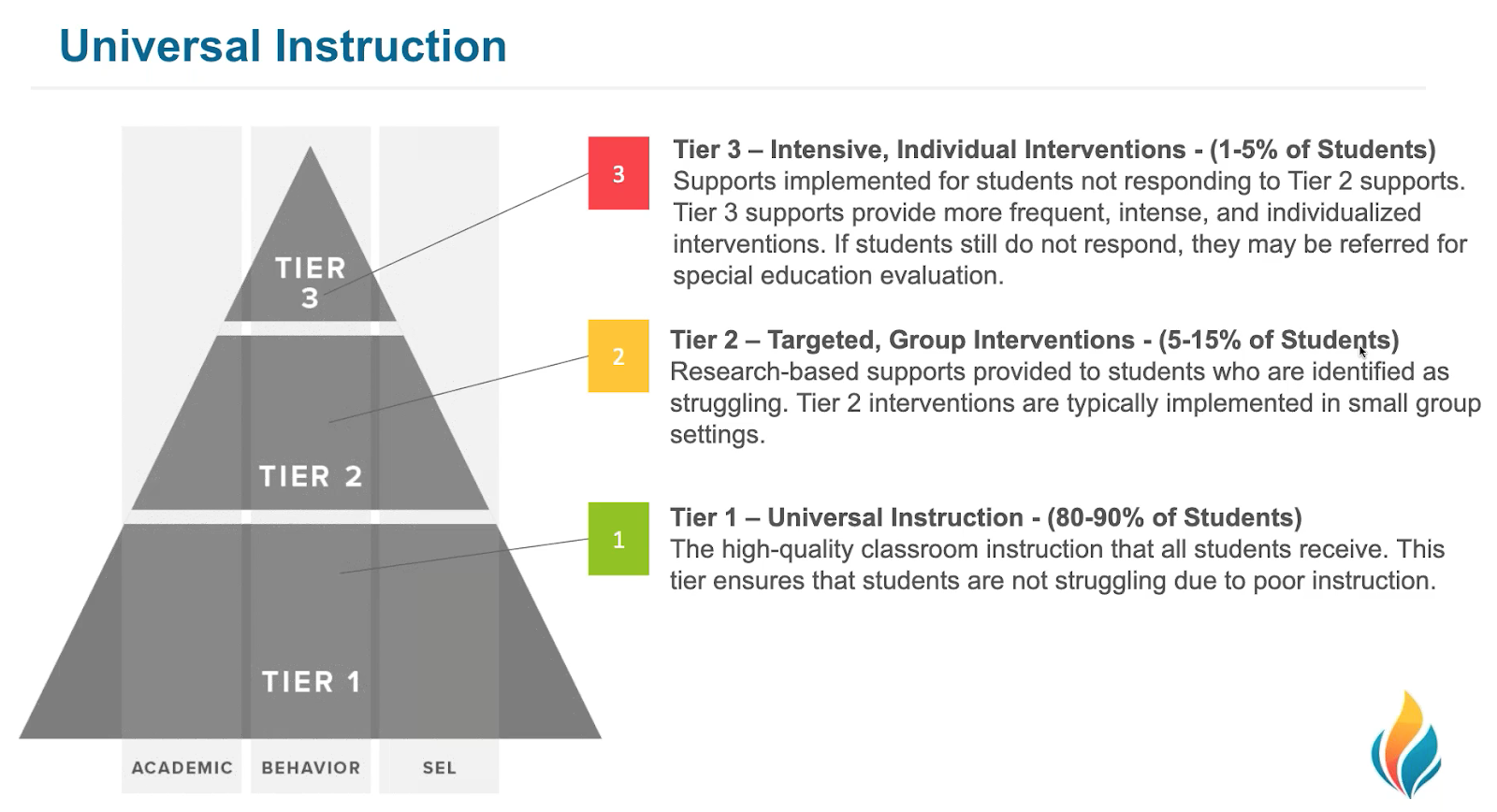

The reason to use universal screening assessments is to identify which students need additional help to be successful in school. An added benefit of screening is that such information can be used to predict student performance on state assessments. Prior to the use of screening assessments, students would have to “wait to fail” before they might be eligible for help. And, even after a student failed one or more state tests, there was no guarantee that assistance would be provided. Thanks to a shift in how tests are used in schools, students no longer must wait to fail. The shift in practice is the result of using a multi-tier system of support (MTSS). With an MTSS, all students are screened to learn which ones might need more help. The screening data are compared to other sources of information about the students to confirm which students need assistance. Those students identified as needing extra instruction are then provided with regular additional lessons and monitored with progress measures. It might not be possible for every student to pass the state test, but more are likely to succeed if they are given extra instruction earlier in the year. Universal screening can show which students need additional instruction to meet the state’s learning goals. Using screening methods that have been identified as predictive of state tests is an important way to make sure that students do not have to wait to fail.

References

Common Core State Standards Initiative. (2016). Standards in your state. Retrieved from: http://www.corestandards.org/standards-in-your-state/

U.S. Department of Education. (2016). Every Student Succeeds Act. Retrieved from: http://www.ed.gov/essa?src=rn

U.S. Department of Education. (2016). Race to the Top Assessment Program. Retrieved from: http://www2.ed.gov/programs/racetothetop-assessment/awards.html

Dr. Rachel Brown is Associate Professor of Educational Psychology at the University of Southern Maine and also serves as FastBridge Learning's Senior Academic Officer. Her research focuses on effective academic assessment and intervention, including multi-tier systems of support.